I am a senior AI researcher at IPAI Aleph Alpha Research. My main goal is to bring advanced AI methods to real-world applications, with a methodological focus on reinforcement learning and generative AI.

Previously, I was a senior expert on reinforcement learning and activity lead at the Bosch Center for AI. I completed my PhD at ETH Zurich in 2019, for which I received the ELLIS PhD Award, and held an AI Fellowship from the Open Philanthropy Project. I completed research internships at Microsoft Research and DeepMind and was the workflow chair for ICML 2018.

Recent News

- Jul. 2024: Publications Chair for ICML 2024 and 2025

- Jul. 2024: Reinforcement Learning Workshop accepted at ICML 2024

- Feb. 2024: Invited talk at the RL4AA Workshop

- Jan. 2024: Invited talk at the RL Workshop in Mannheim

- Sep. 2023: Represented Bosch at the ELLIS PhD Symposium

- Jul. 2023: Invited talk at the Reinforcement Learning Summer School

- Dec. 2022: Invited talk at the NeurIPS Trustworthy AI Workshop

- Jul. 2022: Invited talk at IJCAI Workshop on Safe RL

- Feb. 2021: Two papers accepted at ICLR and AISTATS

- Oct. 2021: Invited talk at the Control Seminar, University of Oxford

- Oct. 2021: Outstanding reviewer award for NeurIPS 2021

- Sep. 2021: Invited talk at TU Darmstadt

- Sep. 2021: Panel speaker at IROS workshop on Safe Real-World Robot Autonomy

- Mar. 2021: Guest lecture on safe reinforcement learning at UCSD

Talks and Lectures

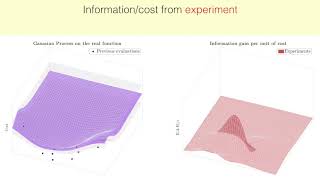

Guest lecture on Safe Bayesian Optimization for CS 159: Data-Driven Algorithm Design at Caltech.

ETH Day 2018 short presentation (in German)

Invited talk at the Workshop on Reliable AI 2017

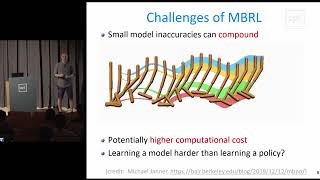

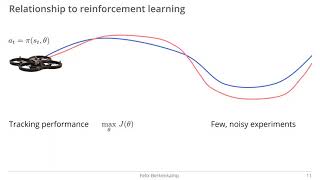

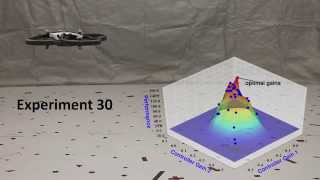

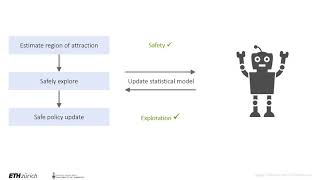

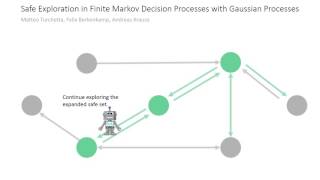

NIPS/CoRL 2017: "Safe Model-based Reinforcement Learning with Stability Guarantees".